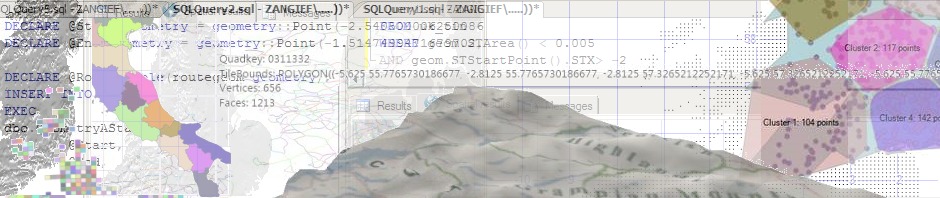

If you’ve tried dabbling with shaders at all, you’ve probably come across ShaderToy – an online shader showcase with some pretty amazing examples of what’s possible in a few lines of shader code, inspired greatly by classic demoscene coding. Here are just two examples:

“Seascape” by TDM

“Elevated” by iq

It’s an amazing resource, not only for inspiration but for learning how to create shaders, since every example comes with full source code which you can edit and immediately test online in your browser, alter parameters, supply different inputs etc.

The shaders exhibited on ShaderToy are exclusively written in GLSL, and run in your browser using WebGL. I thought it might be fun to keep my shader skills up to scratch by converting some of them to Cg / HLSL for use in Unity. I’m using Unity 5 but, to the best of my knowledge, this should work with any version of Unity.

GLSL -> HLSL conversion

It would probably be possible to write an automatic conversion tool to turn a GLSL shader into an HLSL shader, but I’m not aware of one. So I’ve been simply stepping through each line of the shader and replacing any GLSL-specific functions with the HLSL equivalent. Fortunately, many functions are the same, others have direct equivalents and, as a shader is typically less than 100 lines long, what sounds like a manual process only takes a few minutes in practice.

Microsoft have published a very useful reference guide here which details many of the general differences between GLSL and HLSL. Unity also have a useful page here. The following is a list of specific issues I’ve encountered when converting Shadertoy shaders to Unity:

- Replace iGlobalTime shader input (“shader playback time in seconds”) with _Time.y

- Replace iResolution.xy (“viewport resolution in pixels”) with _ScreenParams.xy

- Replace vec2 types with float2, mat2 with float2x2 etc.

- Replace vec3(1) shortcut constructors in which all elements have same value with explicit float3(1,1,1)

- Replace texture2D() with tex2D()

- Replace the two-argument atan(y,x) with atan2(y,x) <- Note the function name changes but the parameter ordering (y first) stays the same

- Replace mix() with lerp()

- Replace matrix multiplication written with * or *= with mul(); component-wise * on scalars and vectors is unchanged

- Remove third (bias) parameter from texture2D lookups

- mainImage(out vec4 fragColor, in vec2 fragCoord) is the fragment shader function, equivalent to float4 mainImage(float2 fragCoord : SV_POSITION) : SV_Target

- The vertical axis is flipped between the two conventions: GLSL (OpenGL) places 0 at the bottom and increases upwards, while HLSL (Direct3D) places 0 at the top and increases downwards, so you may need to use uv.y = 1 - uv.y at some point.
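
To make a few of these substitutions concrete, here is a rough sketch of two small helpers converted by hand, with the GLSL shown in comments. The functions rotate and tint are hypothetical and not taken from any particular ShaderToy. The matrix case is the one that tends to trip people up. GLSL fills a matrix constructor column by column whereas HLSL fills it row by row, so if you keep the constructor arguments unchanged you also need to swap the operand order when you switch to mul().

```hlsl
// GLSL original (hypothetical helper, shown for comparison):
//   vec2 rotate(vec2 p, float a) {
//       mat2 m = mat2(cos(a), -sin(a), sin(a), cos(a));
//       p *= m;                         // same as p = p * m
//       return p;
//   }
float2 rotate(float2 p, float a)
{
    // Same argument list as the GLSL constructor. Because HLSL fills the
    // matrix row by row, this is the transpose of the GLSL matrix.
    float2x2 m = float2x2(cos(a), -sin(a), sin(a), cos(a));

    // Swapping the operand order compensates for the transpose:
    //   GLSL p * m  ->  HLSL mul(m, p)
    //   GLSL m * p  ->  HLSL mul(p, m)
    return mul(m, p);
}

// GLSL original (hypothetical helper, shown for comparison):
//   vec3 tint(vec2 uv) {
//       float angle = atan(uv.y, uv.x);
//       return mix(vec3(1), vec3(0.1, 0.4, 0.8), 0.5 + 0.5 * sin(angle));
//   }
float3 tint(float2 uv)
{
    float angle = atan2(uv.y, uv.x);        // atan(y, x) -> atan2(y, x)
    return lerp(float3(1, 1, 1),            // vec3(1)    -> float3(1, 1, 1)
                float3(0.1, 0.4, 0.8),      // vec3       -> float3
                0.5 + 0.5 * sin(angle));    // mix()      -> lerp()
}
```

Both helpers are meant to be pasted between CGPROGRAM and ENDCG, above the fragment function that calls them.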

Note that ShaderToys don’t have a vertex shader function – they are effectively full-screen pixel shaders which calculate the value at each UV coordinate in screenspace. As such, they are most suitable for use in a full-screen image effect (or, you can just apply them to a plane/quad if you want) in which the UVs range from 0-1.
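
For the plane/quad case, a minimal sketch of the extra plumbing might look like the following. It assumes the mesh supplies 0-1 UVs in TEXCOORD0 (as Unity's built-in Quad does) and uses UNITY_MATRIX_MVP as in Unity 5. The gradient returned by frag is only a placeholder for the converted ShaderToy body.

```hlsl
// Goes between CGPROGRAM and ENDCG, after
//   #pragma vertex vert
//   #pragma fragment frag

struct v2f
{
    float4 position : SV_POSITION;
    float2 uv       : TEXCOORD0;   // mesh UVs, assumed to span 0-1 over the quad
};

// Pass-through vertex function: transform the vertex and forward its UVs
v2f vert(float4 vertex : POSITION, float2 texcoord : TEXCOORD0)
{
    v2f o;
    o.position = mul(UNITY_MATRIX_MVP, vertex);
    o.uv = texcoord;
    return o;
}

fixed4 frag(v2f i) : SV_Target
{
    // i.uv stands in for fragCoord.xy / iResolution.xy in the ShaderToy source
    float2 uv = i.uv;

    // Placeholder output: replace with the converted mainImage body
    return fixed4(uv.x, uv.y, 0.0, 1.0);
}
```

Because the UVs come from the mesh rather than from the screen position, the effect follows the object instead of being pinned to the viewport.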

But calculating pixel shaders for each pixel in a 1024×768 resolution (or higher) is *expensive*. One solution if you want to achieve anything like a game-playable framerate is to render the effect to a fixed-size RenderTexture, and then scale that up to fill the screen. Here’s a simple generic script to do that:

```csharp
using System;
using UnityEngine;

[ExecuteInEditMode]
public class ShaderToy : UnityStandardAssets.ImageEffects.ImageEffectBase
{
    public int horizontalResolution = 320;
    public int verticalResolution = 240;

    // Called by camera to apply image effect
    void OnRenderImage (RenderTexture source, RenderTexture destination)
    {
        // To draw the shader at full resolution, use:
        // Graphics.Blit (source, destination, material);

        // To draw the shader at scaled down resolution, use:
        RenderTexture scaled = RenderTexture.GetTemporary (horizontalResolution, verticalResolution);
        Graphics.Blit (source, scaled, material);
        Graphics.Blit (scaled, destination);
        RenderTexture.ReleaseTemporary (scaled);
    }
}
```

And finally, here’s an example of a ShaderToy shader converted to Unity. This one is “Bubbles” by Inigo Quilez:

```shaderlab
// See https://www.shadertoy.com/view/4dl3zn
// GLSL -> HLSL reference: https://msdn.microsoft.com/en-GB/library/windows/apps/dn166865.aspx
Shader "Custom/Bubbles" {
    SubShader {
        Pass {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag

            struct v2f {
                float4 position : SV_POSITION;
            };

            // Pass-through vertex function; the output semantic comes from the v2f struct
            v2f vert(float4 v : POSITION) {
                v2f o;
                o.position = mul(UNITY_MATRIX_MVP, v);
                return o;
            }

            // Parameter named "input" so it does not clash with the loop counter i below
            fixed4 frag(v2f input) : SV_Target {
                // Map pixel coordinates to the -1..1 range and correct for aspect ratio
                float2 uv = -1.0 + 2.0 * input.position.xy / _ScreenParams.xy;
                uv.x *= _ScreenParams.x / _ScreenParams.y;

                // Background
                fixed4 outColour = fixed4(0.8 + 0.2 * uv.y, 0.8 + 0.2 * uv.y, 0.8 + 0.2 * uv.y, 1);

                // Bubbles
                for (int i = 0; i < 40; i++) {
                    // Bubble seeds
                    float pha = sin(float(i) * 546.13 + 1.0) * 0.5 + 0.5;
                    float siz = pow(sin(float(i) * 651.74 + 5.0) * 0.5 + 0.5, 4.0);
                    float pox = sin(float(i) * 321.55 + 4.1);

                    // Bubble size, position and color
                    float rad = 0.1 + 0.5 * siz;
                    float2 pos = float2(pox, -1.0 - rad + (2.0 + 2.0 * rad) * fmod(pha + 0.1 * _Time.y * (0.2 + 0.8 * siz), 1.0));
                    float dis = length(uv - pos);
                    float3 col = lerp(float3(0.94, 0.3, 0.0), float3(0.1, 0.4, 0.8), 0.5 + 0.5 * sin(float(i) * 1.2 + 1.9));

                    // Add a black outline around each bubble
                    col += 8.0 * smoothstep(rad * 0.95, rad, dis);

                    // Render
                    float f = length(uv - pos) / rad;
                    f = sqrt(clamp(1.0 - f * f, 0.0, 1.0));
                    outColour.rgb -= col.zyx * (1.0 - smoothstep(rad * 0.95, rad, dis)) * f;
                }

                // Vignetting
                outColour *= sqrt(1.5 - 0.5 * length(uv));
                return outColour;
            }
            ENDCG
        }
    }
}
```

Pretty, isn’t it?

Nvidia’s cgc can take GLSL and output HLSL apparently. http://http.developer.nvidia.com/Cg/cgc.html

I’m not adept at writing shaders, so could you tell me if one can convert the seascape to Unity? Would that work? Since you said they’re all fullscreen fx, I’m not sure an ocean shader would work as it should in the Unity ecosystem. =|

Yes – any of the shadertoys can be converted. Full screen fx / shaders differ only in how they interpret UV coordinates, and whether they can access anything already written to the render buffer.

I am having trouble converting this CGToy to Unity:

https://www.shadertoy.com/view/4tsXRH

Specifically with the Mul2 and the usage of the mul() function. Could you add a couple examples? 🙂
